Dexta Robotics are looking to create the next-generation VR input device: one that not only lets you interact with virtual objects but also lets you sense their size and solidity, effectively allowing you to touch virtual reality. This is the Dexmo exoskeleton glove.

The core technology behind virtual reality is progressing quickly, but as VR headsets become more advanced, companies are looking for where the next generational leap in VR will come from. Arguably, that next advance will involve input. By the end of the year, all three major VR platforms will pair motion controllers with their systems: the HTC Vive already ships with Lighthouse-tracked controllers out of the box, Oculus’ Touch is set to launch before the year is out, and PlayStation VR’s Move controllers should see a resurgence alongside the headset’s launch in October. So what’s the next step for VR?

If you’re a long-time reader of Road to VR, you may recall Dexta Robotics. They’re the company behind Dexmo, a robotic exoskeleton glove that provides force feedback to simulate the act of touching objects in virtual reality. Dexta believe that this advanced form of haptic feedback represents the next step in the VR experience. Well, Dexta are back, and they’re ready to show the latest version of their device.

Dexmo works by capturing the full range of your hand motion and providing what Dexta term “instant force feedback”. With Dexmo, the company claims, you can feel the size, shape, and stiffness of virtual objects: “You can touch the digital world.” When your virtual avatar encounters a virtual object, Dexmo’s dynamic grasping-handling software reads that object’s physical properties and computes variable force feedback. The glove then applies an opposing force to your fingers, so virtual objects seem to ‘push back’ against them, just like a real object would.

That’s impressive enough, but because the applied force is variable, the gloves can also convey more subtle physical properties, the company says, so you can tell the softness of a rubber duck from the solidity of a rock, for example. You can see some examples of how this works in practice in the demonstration video above. “We conducted many studies where test subjects performed tasks using Vive controller or Dexmo. Tasks such as turning a knob, grasping an odd-shaped object, playing piano, pressing buttons, and throwing a ball. As expected, no contest! Users preferred Dexmo, and reportedly enjoyed a much higher level of immersion.”
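To illustrate what “variable” force feedback means, here is a minimal sketch of penalty-based (“virtual spring”) haptic rendering, a common textbook technique; Dexta have not said that Dexmo works this way. It assumes each object carries a single stiffness value and that the glove can resist up to a hypothetical maximum force (`MAX_FORCE_N`). The stiffness numbers are made up purely to contrast a soft object with a hard one.

```python
from dataclasses import dataclass

# Hypothetical actuator limit; not a published Dexmo specification.
MAX_FORCE_N = 5.0


@dataclass
class VirtualObject:
    name: str
    stiffness: float  # newtons per metre of fingertip penetration (assumed unit)


def feedback_force(obj: VirtualObject, penetration_m: float) -> float:
    """Virtual-spring force for one fingertip pressing into an object.

    penetration_m is how far the tracked fingertip has moved past the
    object's surface; zero or negative means the finger is not in contact.
    """
    if penetration_m <= 0.0:
        return 0.0
    force = obj.stiffness * penetration_m  # Hooke's law: F = k * x
    return min(force, MAX_FORCE_N)  # the glove can only resist so hard


# Illustrative stiffness values, chosen only to contrast soft and hard.
duck = VirtualObject("rubber duck", stiffness=200.0)
rock = VirtualObject("rock", stiffness=5000.0)

for depth_m in (0.002, 0.005):
    print(f"{depth_m * 1000:.0f} mm: "
          f"duck {feedback_force(duck, depth_m):.1f} N, "
          f"rock {feedback_force(rock, depth_m):.1f} N")
```

Pressing 5 mm into the duck yields a gentle 1 N, while the rock reaches the force limit almost immediately and so feels rigid: roughly the soft-versus-solid contrast the company describes.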

Right now, Dexmo works in tandem with two Vive controllers strapped to the underside of the user’s arms, which provide positional tracking of your hands in VR. The company is looking to integrate tracking properly at a later date, either by licensing Valve’s Lighthouse tracking, which Valve recently opened up to developers, or by another method yet to be decided.
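With a controller strapped to each forearm, the hand’s position has to be inferred from the controller’s pose. A minimal sketch of that step, assuming a fixed, pre-measured mounting offset (the `WRIST_OFFSET` value is hypothetical, not something Dexta have published): rotate the offset by the controller’s orientation quaternion, then add the controller’s position.

```python
Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), assumed unit length

# Hypothetical calibration: the wrist's position relative to a controller
# strapped under the forearm, expressed in the controller's frame (metres).
WRIST_OFFSET: Vec3 = (0.0, 0.04, -0.12)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q (same result as q * v * conj(q))."""
    w, axis = q[0], (q[1], q[2], q[3])
    t = tuple(2.0 * c for c in cross(axis, v))
    u = cross(axis, t)
    return tuple(v[i] + w * t[i] + u[i] for i in range(3))


def wrist_position(ctrl_pos: Vec3, ctrl_rot: Quat) -> Vec3:
    """Controller pose -> estimated wrist position in tracking space."""
    offset_world = rotate(ctrl_rot, WRIST_OFFSET)
    return tuple(p + o for p, o in zip(ctrl_pos, offset_world))
```

Rotating the offset, rather than simply adding it, keeps the estimate on the wrist as the forearm twists; a plain positional offset would drift away from the hand whenever the arm turns.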

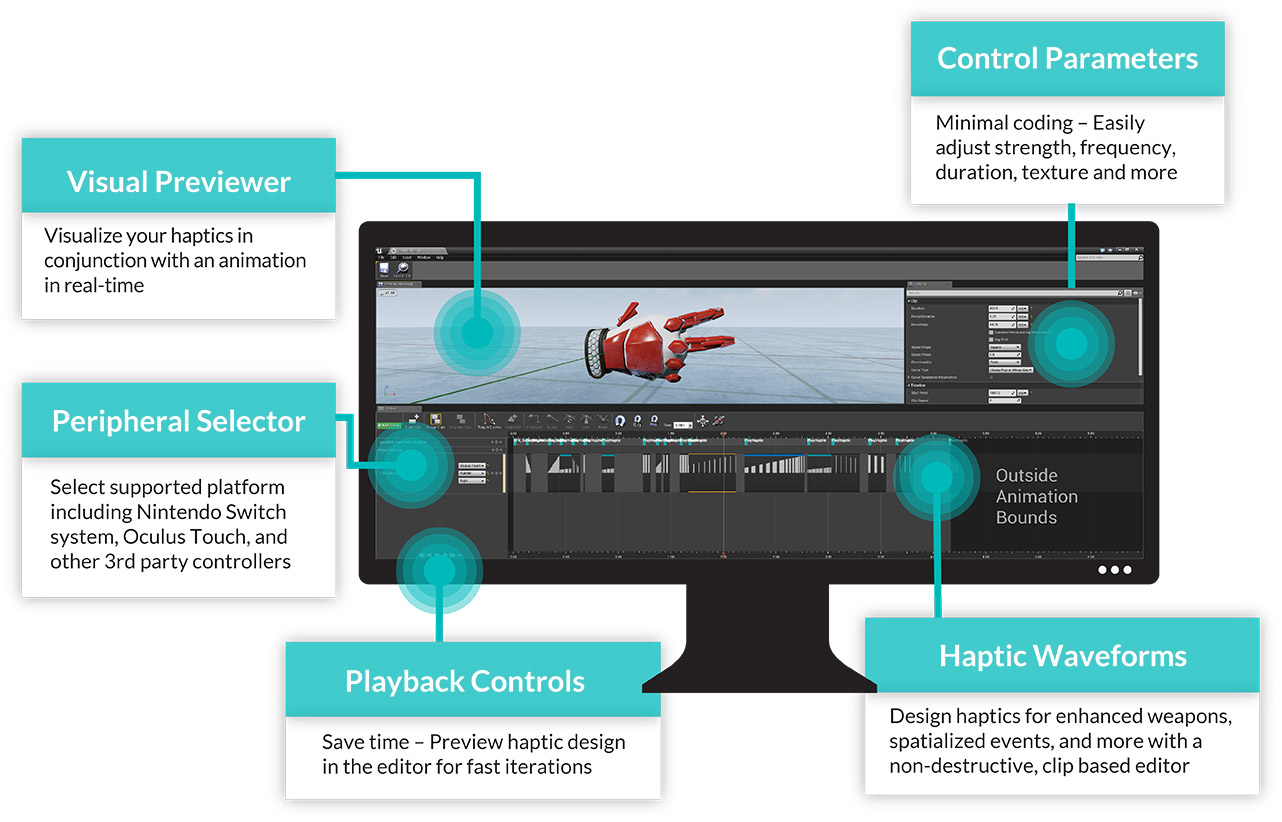

In terms of software integration, Dexta provide an SDK that allows developers to assign physical property values to virtual objects, so that the VR experience can send that data to the battery-powered gloves. The gloves communicate wirelessly with the host via NRF modules; Dexta cite Bluetooth and WiFi as “too slow” for their needs and claim their solution achieves around 25-50ms of input latency.
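To make the host-to-glove step concrete, the sketch below packs one force level per finger into a compact, fixed-size binary payload using Python’s standard `struct` module. The packet layout, the 0-255 quantisation and the function names are entirely hypothetical and are not Dexta’s protocol; the 32-byte ceiling assumes an nRF24-series radio.

```python
import struct

# Hypothetical wire format (NOT Dexta's protocol), little-endian:
#   1 byte   sequence number, wraps at 256
#   1 byte   hand id: 0 = left, 1 = right
#   5 bytes  force level per finger, thumb to little finger, 0-255
PACKET_FORMAT = "<BB5B"
NRF24_MAX_PAYLOAD = 32  # bytes; payload ceiling of nRF24-series radios

assert struct.calcsize(PACKET_FORMAT) <= NRF24_MAX_PAYLOAD


def encode_forces(seq: int, hand: int, forces: list[float]) -> bytes:
    """Quantise five normalised forces (0.0 to 1.0) into one payload."""
    if len(forces) != 5:
        raise ValueError("expected exactly one force value per finger")
    levels = [round(max(0.0, min(1.0, f)) * 255) for f in forces]
    return struct.pack(PACKET_FORMAT, seq % 256, hand, *levels)


def decode_forces(packet: bytes) -> tuple[int, int, list[float]]:
    """Inverse of encode_forces, as a receiver might unpack it."""
    seq, hand, *levels = struct.unpack(PACKET_FORMAT, packet)
    return seq, hand, [level / 255 for level in levels]
```

A fixed-size layout like this lets the receiver decode each update with a single `struct.unpack` call and no further parsing.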

Dexta believe this latest version of Dexmo now represents the core of what they intend to offer in a final device; the prototype can be seen in the images and videos in this article.

We wanted to dig a little deeper into the history and future of Dexmo, so we spoke to Aler Gu, CEO of Dexta Robotics.

Road to VR: The Dexmo has come a long way since we first saw it. Can you outline some of the key milestones you’ve passed?

Gu: Indeed, Dexmo has come a long way. Ever since October 2013, when we initiated Project Dexmo, we haven’t stopped improving it:

- In June 2014 we came up with the design of “switching force feedback”, proved the concept and filed a US patent for it.

- In October 2014 we launched and then canceled the Kickstarter project. But we found another way to raise capital after that and pushed Dexmo into manufacturing after all.

- In June 2015 our experiments with the “variable force feedback” design, along with some other force feedback attempts, were proven to work. We filed several patents to protect the IP as well.

- In February 2016 our force feedback unit (FFU) was successfully batch manufactured and then assembled into Dexmo. It was a big deal for us because the FFU was designed from scratch, and it took a lot of effort to go from something that “barely works” to a “manufacturable, reliable product”.

- In June 2016 we finished multiple Unity demos to demonstrate what Dexmo is capable of, and our SDK was finally complete.

Road to VR: Your promotional video predicts that Dexmo will be beneficial in various industries, but I wonder if you have your eye on a consumer device? How much would such a consumer product cost?

Gu: It’s not the right time to talk about price now. Before actually selling Dexmo to consumers, we have to make sure it works right out of the box. So our plan for now is to partner with talented VR development firms and deliver the best immersive experience possible, so that when people get their Dexmo, they immediately realize how game-changing this innovation is. We are also interested in talking to the leaders of the VR/MR industries to explore possibilities for in-depth cooperation.

Road to VR: You’re using the SteamVR controllers to provide positional tracking. What are your plans for tracking on the final devices? Would licensing Lighthouse tracking from Valve be something you’d look at?

Gu: It’s funny: two years ago we had to explain to people that “tracking is a solved problem”, and nowadays, thanks to Valve, we no longer need to do that. People get it. It is very easy to integrate the Lighthouse tracking system into Dexmo, and we will definitely look into that. What you see in the video already shows what can be done.

Besides Lighthouse, Dexmo can also work with any other tracking method. For example, we tried using the tracking coordinates that the HoloLens API provides, and that doesn’t even require setting up Lighthouse. I guess what I want to say is: the problem Dexmo solves is hand tracking with integrated force feedback; positional tracking can come from anything, because there are a lot of options for developers to choose from.

Road to VR: How much do the current units weigh and what’s the current battery life? Do they use Bluetooth or WiFi?

Gu: Nothing is definite yet, but I can give you an idea: Dexmo weighs slightly more than an iPhone 6 Plus [circa 172g], and can be made even lighter. The battery can ideally run for over 4 hours on a full charge. It uses NRF modules for wireless communication; WiFi and Bluetooth were too slow for our applications.

Road to VR: How simple is Dexmo to develop for? For example, if a developer takes your SDK and adds Dexmo integration, what work is involved in making this happen?

Gu: Dexmo is very developer-friendly and well documented. With our SDK, libDexmo, any developer should be able to pick up Dexmo within a few hours of reading the documentation. We built some Unity plugins that are somewhat similar to the Vive’s. Developers can just pull out a pair of hands, and our grasping algorithm will take over and handle the grasping for them automatically. Frankly, all they need to do is apply colliders and stiffness values to objects.
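Gu’s description suggests the developer’s job is mostly annotation: give each object a collider and a stiffness, and let the SDK decide when the hand is holding it. The sketch below mimics that division of labour with a sphere collider and a deliberately naive grasp rule (the thumb plus at least one other fingertip touching the object). The class, the finger names and the rule are hypothetical illustrations, not libDexmo’s API or Dexta’s grasping algorithm.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class GraspableObject:
    """What the developer supplies: a collider and a stiffness value."""
    name: str
    center: Vec3
    radius: float     # sphere collider radius in metres
    stiffness: float  # hypothetical N/m; would feed force feedback (unused here)


def touching(obj: GraspableObject, fingertip: Vec3) -> bool:
    """True if the fingertip lies inside or on the sphere collider."""
    return math.dist(obj.center, fingertip) <= obj.radius


def is_grasped(obj: GraspableObject, fingertips: dict[str, Vec3]) -> bool:
    """Naive rule: the thumb plus at least one other finger in contact."""
    if not touching(obj, fingertips["thumb"]):
        return False
    return any(touching(obj, tip)
               for finger, tip in fingertips.items() if finger != "thumb")
```

For example, a 5 cm ball with the thumb resting on it counts as grasped only once the index fingertip also closes onto the opposite side; a real grasping algorithm would also weigh contact normals and friction.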

Road to VR: Can you give us some of your reasoning behind the cancellation of your 2014 Kickstarter campaign for the Dexmo?

Gu: What we demonstrated on Kickstarter back in 2014 was only a concept. When we saw people’s reaction, we were flattered. But we soon realized they expected a lot more than what we could deliver at that time. Most backers wanted an all-in-one final product that they could use immediately, with complete platform support. Back then VR wasn’t as established as it is now. We knew that if people didn’t have the knowledge to even set up a tracking system themselves, they were probably going to have a bad time using it. So we actually needed way more than $200k to research and manufacture the product to meet people’s (unrealistic) expectations at the time.

So there was a decision for us to make: either we take the money, pretend none of these issues exist, lie to our backers and then deliver a shitty product that nobody is satisfied with (or just not deliver at all, like ControlVR, what a laugh); or we act responsibly, do the right thing, commit to the challenges instead of just talking about them, and come back when we actually have a better product.

Being an engineer, I am not much of a talker. I believe a product will speak for itself. I hope people can feel the time and effort we put into it. It was a tough choice at the time, but I am glad we made the right decision. And today, I am happy to say that this technology finally meets every expectation I had for it.

Thanks to Aler Gu for his time. If you’re keen to know more about Dexmo, we’re hoping to bring you hands-on experience with the devices soon. In the meantime, check out Dexta Robotics’ website for more information.

The latest Gloveone prototype integrates its own tracking system which utilizes IMUs arranged along each finger and along the user’s arm and torso. The result is tracking for not just your fingers, but for your entire arm and torso as well.

The haptics on the Gloveone prototype were functional but didn’t quite sell me on the sensation. The glove uses small vibrating motors at the tips of each finger to give the feeling that you are touching objects in the virtual world. The problem is, when I grab a bottle of water in the real world, I feel pressure on my fingers, not vibration; there’s a mismatch between what my brain is expecting to feel and what it actually feels. Granted, there were a few effects, like the jet exhaust from a hovering drone, where the vibrations did create a plausible sensation. Castillo told me that the particular demo I experienced was made to show the tracking and not so much the haptics. He said that other demos the company has built show a wider range of haptic effects, but I unfortunately didn’t have time to dig into them.

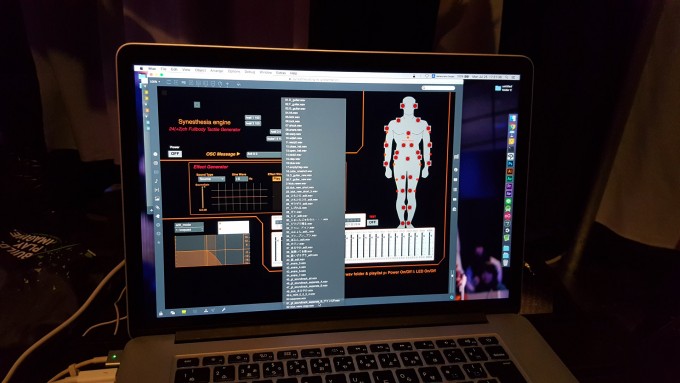

Deep in the basement of the Sands Expo Hall at CES was an area of emerging technologies called Eureka Park, which had a number of VR start-ups hoping to connect with suppliers, manufacturers, investors, or media in order to launch a product or idea. There was an early-stage haptic start-up called